Dbeaver Impala

The SHOW FILES statement displays the files that constitute a specified table, or a partition within a partitioned table. This syntax is available in Impala 2.2 and higher only. The output includes the names of the files, the size of each file, and the applicable partition for a partitioned table. The size includes a suffix of B for bytes, MB for megabytes, and GB for gigabytes.
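As a rough illustration, the Python sketch below builds a SHOW FILES statement and converts the size column into bytes. The helper names `show_files_sql` and `size_to_bytes` are hypothetical. The KB suffix and the 1024-based multipliers are assumptions, not something stated above. Partition values are rendered as plain equality literals without escaping.

```python
import re

# Byte multipliers for the size suffixes. B, MB and GB come from the text above;
# KB and the 1024-based factors are assumptions.
_UNITS = {"B": 1, "KB": 1024, "MB": 1024 ** 2, "GB": 1024 ** 3}
_SIZE_RE = re.compile(r"^\s*(\d+(?:\.\d+)?)\s*(B|KB|MB|GB)\s*$")


def show_files_sql(table, partition=None):
    """Build 'SHOW FILES IN table [PARTITION (col=val, ...)]' (hypothetical helper)."""
    sql = f"SHOW FILES IN {table}"
    if partition:
        specs = []
        for col, val in partition.items():
            if isinstance(val, str):
                # No escaping is attempted; reject quotes to keep the sketch safe.
                if "'" in val:
                    raise ValueError("quotes in partition values are not supported")
                specs.append(f"{col}='{val}'")
            else:
                specs.append(f"{col}={val}")
        sql += " PARTITION (" + ", ".join(specs) + ")"
    return sql


def size_to_bytes(text):
    """Convert a SHOW FILES size such as '24B' or '1.50MB' into an integer byte count."""
    match = _SIZE_RE.match(text)
    if match is None:
        raise ValueError(f"unrecognized size: {text!r}")
    number, unit = match.groups()
    return int(float(number) * _UNITS[unit])
```

For example, `show_files_sql("db.t", {"year": 2021})` produces `SHOW FILES IN db.t PARTITION (year=2021)`.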

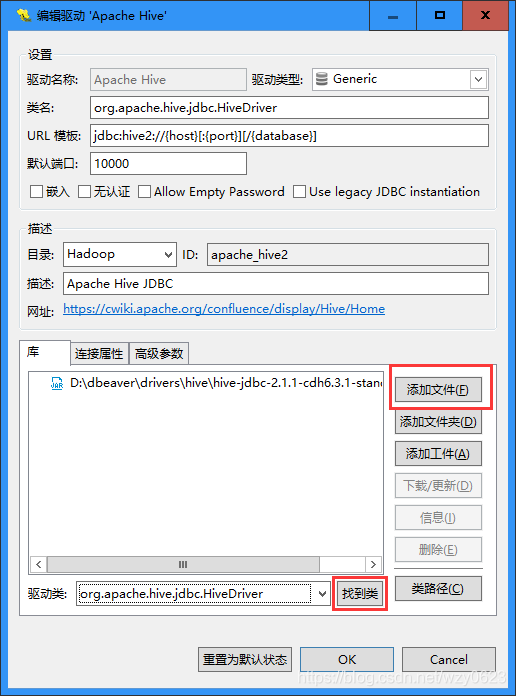

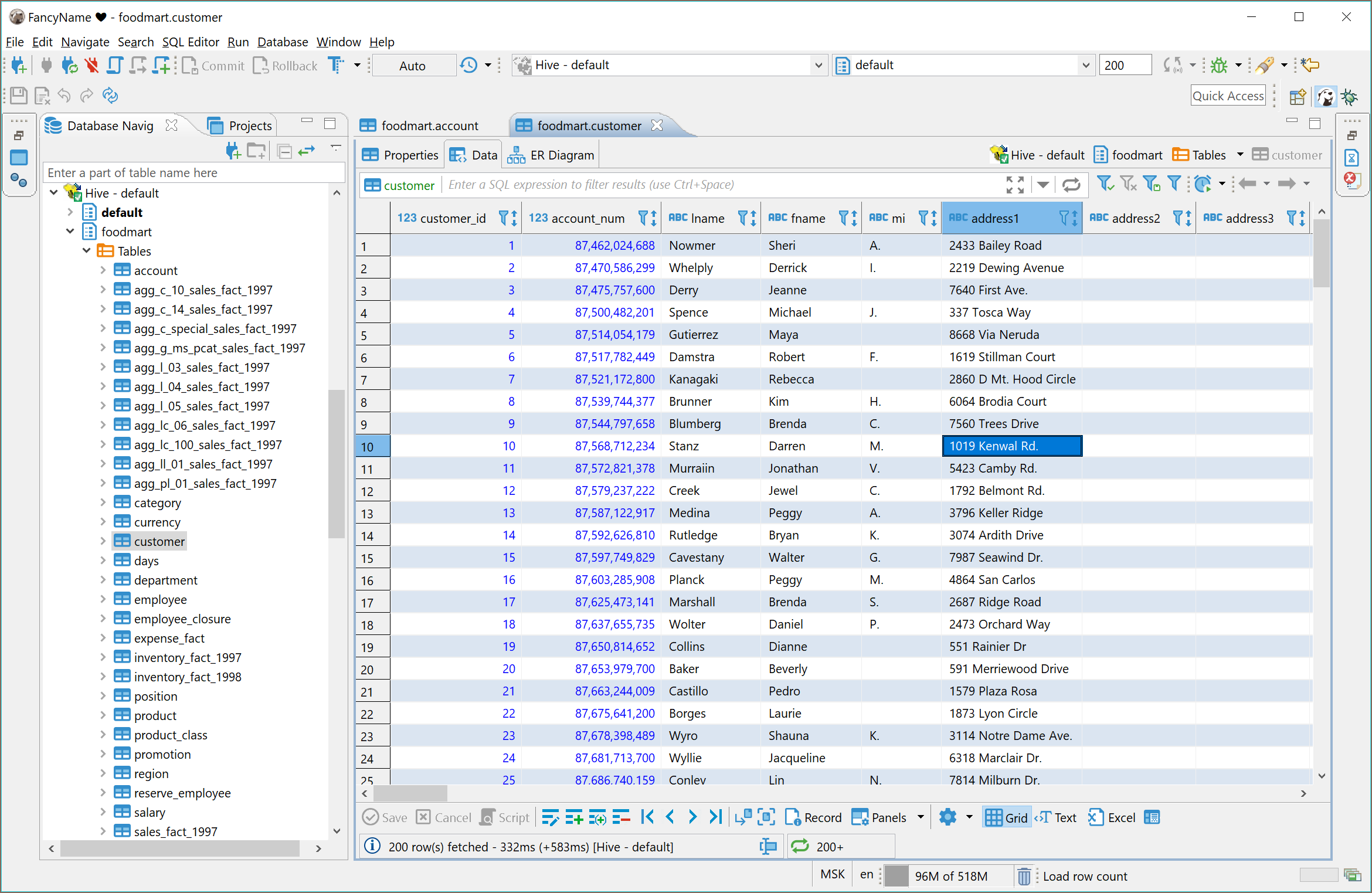

Operators such as <, IN, LIKE, and BETWEEN can be used in the PARTITION clause, instead of only equality operators.

Within an impala-shell session, you can only issue queries while connected to an instance of the impalad daemon. You can specify the connection information:

- Through command-line options when you run the impala-shell command.
- Through a configuration file that is read when you run the impala-shell command.
- During an impala-shell session, by issuing a CONNECT command.

I am using Impala 2.12.0-cdh5.16.1 and connecting to Impala with Impala JDBC 2.6.4.1005. Normally it runs very well, but when I run distcp (which consumes cluster network I/O and HDFS I/O), the Java program may throw errors:

2019/02/28 12:73 ERROR run.QihooStatusTask(run:88) - ClouderaImpalaJDBCDriver(700100) Connection timeout expired.

Hi all, I'm using Zeppelin to run reports using Impala. I downloaded the Impala JDBC driver to my Linux machine. When I look at the Impala queries in Cloudera Manager, I see my queries running with an empty user ' '. I haven't integrated LDAP authentication with Impala yet. I have 2 small questions: 1.

The following uses DBeaver as an example. Other database connection tools, such as DbVisualizer and PlSQL, can connect in a similar way: just create a new driver in DriverManager and load the Impala JDBC jar into it. This method also applies to other databases that support JDBC connections, such as Hive. Download the Impala JDBC Connector and extract the ClouderaImpalaJDBCxxx.x.x.x.z archive you need from it.
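The sketch below covers the two connection paths mentioned above. It assumes the Cloudera Impala JDBC URL form `jdbc:impala://host:port/schema;Key=Value`, with the default port 21050. It also assumes the impala-shell `-i host:port` option (default port 21000) and the `-q query` option. The function names are hypothetical. Driver properties such as `AuthMech` and `UID` are passed through unchanged and should be checked against your driver version's installation guide before use.

```python
def impala_jdbc_url(host, port=21050, schema="default", **props):
    """Assemble jdbc:impala://host:port/schema;Key=Value;... (hypothetical helper).

    Property names such as AuthMech or UID are passed through unchanged;
    verify them against your Impala JDBC driver documentation.
    """
    url = f"jdbc:impala://{host}:{port}/{schema}"
    for key, value in props.items():
        url += f";{key}={value}"
    return url


def impala_shell_args(host, port=21000, query=None):
    """Build an impala-shell command line that connects to one impalad."""
    args = ["impala-shell", "-i", f"{host}:{port}"]
    if query is not None:
        args += ["-q", query]
    return args
```

For instance, `impala_jdbc_url("impala-host", AuthMech=2, UID="zeppelin")` yields `jdbc:impala://impala-host:21050/default;AuthMech=2;UID=zeppelin`. Supplying an explicit user name this way may help with the empty-user symptom described above, assuming the chosen `AuthMech` value (treated here as user-name authentication) is accepted by your cluster. The shell argument list can be passed directly to `subprocess.run`.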

Usage notes:

You can use this statement to verify the results of your ETL process: that is, that the expected files are present, with the expected sizes. You can examine the file information to detect conditions such as empty files, missing files, or inefficient layouts due to a large number of small files. When you use INSERT statements to copy from one table to another, you can see how the file layout changes due to file format conversions, compaction of small input files into large data blocks, and multiple output files from parallel queries and partitioned inserts.
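The checks described above can be automated along the lines of the sketch below. It assumes the SHOW FILES rows have already been fetched and their sizes converted to bytes. The `FileRow` type, the `audit_files` helper and the 16 MB small-file threshold are hypothetical choices, not Impala defaults.

```python
from dataclasses import dataclass


@dataclass
class FileRow:
    """One SHOW FILES row, with the size already converted to bytes."""
    path: str
    size_bytes: int
    partition: str = ""


def audit_files(rows, expected_paths=(), small_threshold=16 * 1024 * 1024):
    """Report empty, small and missing files (the threshold is an arbitrary choice)."""
    seen = {row.path for row in rows}
    return {
        "total_files": len(rows),
        "empty": [r.path for r in rows if r.size_bytes == 0],
        "small": [r.path for r in rows if 0 < r.size_bytes < small_threshold],
        "missing": sorted(set(expected_paths) - seen),
    }
```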

The output from this statement does not include files that Impala considers to be hidden or invisible, such as those whose names start with a dot or an underscore, or that end with the suffixes .copying or .tmp.
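The hidden-file rule above can be expressed as a small predicate. This sketch applies only the name patterns listed in the paragraph to the file's base name: a leading dot or underscore, or a `.copying` or `.tmp` suffix. Impala's own implementation may check additional cases, and the helper names are hypothetical.

```python
import posixpath


def is_hidden_data_file(path):
    """True if the file name matches the hidden/temporary patterns described above."""
    name = posixpath.basename(path)
    return name.startswith((".", "_")) or name.endswith((".copying", ".tmp"))


def visible_files(paths):
    """Keep only the paths SHOW FILES would be expected to list."""
    return [p for p in paths if not is_hidden_data_file(p)]
```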

The information for partitioned tables complements the output of the SHOW PARTITIONS statement, which summarizes information about each partition. SHOW PARTITIONS produces some output for each partition, while SHOW FILES does not produce any output for empty partitions because they do not include any data files.
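To see the difference between SHOW PARTITIONS and SHOW FILES in practice, a sketch like the following counts data files per partition and reports the partitions that SHOW FILES would omit. It assumes both result sets are available as Python lists. It also assumes SHOW FILES rows are `(path, size, partition)` tuples and that partition specs are compared as plain strings. The helper names and the `y=1` style specs are hypothetical.

```python
def files_per_partition(partitions, file_rows):
    """Count SHOW FILES rows per partition, including partitions with zero files."""
    counts = {p: 0 for p in partitions}
    for _path, _size, part in file_rows:
        counts[part] = counts.get(part, 0) + 1
    return counts


def empty_partitions(partitions, file_rows):
    """Partitions listed by SHOW PARTITIONS that have no data files."""
    counts = files_per_partition(partitions, file_rows)
    return [p for p in partitions if counts[p] == 0]
```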


HDFS permissions:


The user ID that the impalad daemon runs under, typically the impala user, must have read permission for all the table files, read and execute permission for all the directories that make up the table, and execute permission for the database directory and all its parent directories.
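The permission requirements above can be listed path by path. This hypothetical sketch assumes a Hive-style layout where partition directories sit under the table directory. It only enumerates the required bits (`r`, `x`) and does not query HDFS.

```python
from pathlib import PurePosixPath


def required_permissions(db_dir, table_dir, data_files):
    """Map each path to the permission bits the impalad user needs on it."""
    needs = {}

    def grant(path, bits):
        needs.setdefault(str(path), set()).update(bits)

    # Execute on the database directory and every parent directory.
    db = PurePosixPath(db_dir)
    for d in (db, *db.parents):
        grant(d, "x")
    # Read and execute on the table directory itself.
    table = PurePosixPath(table_dir)
    grant(table, "rx")
    for f in data_files:
        f = PurePosixPath(f)
        grant(f, "r")  # read on every data file
        # Read and execute on directories between the table and the file (partitions).
        for d in f.parents:
            if table in d.parents:
                grant(d, "rx")
    return needs
```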

Examples:

The following example shows a SHOW FILES statement for an unpartitioned table using text format:


This example illustrates how, after issuing some INSERT .. VALUES statements, the table now contains some tiny files of just a few bytes. Such small files could cause inefficient processing of parallel queries that are expecting multi-megabyte input files. The example shows how you might compact the small files by doing an INSERT .. SELECT into a different table, possibly converting the data to Parquet in the process:
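The compaction approach described above might be scripted as follows. This sketch assumes an unpartitioned source table and uses Impala's `CREATE TABLE ... LIKE ... STORED AS` and `INSERT ... SELECT` statements. The table names and the `compaction_statements` helper are illustrative only.

```python
def compaction_statements(src, dst, file_format="PARQUET"):
    """Return Impala SQL that copies src into a new table dst (unpartitioned case)."""
    return [
        # New table with the same columns as src, but a different file format.
        f"CREATE TABLE {dst} LIKE {src} STORED AS {file_format}",
        # INSERT ... SELECT rewrites the rows, typically into fewer, larger files.
        f"INSERT INTO {dst} SELECT * FROM {src}",
    ]
```

Running SHOW FILES against the destination table afterwards shows whether the tiny files were actually merged.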

The following example shows a SHOW FILES statement for a partitioned text table with data in two different partitions, and two empty partitions. The partitions with no data are not represented in the SHOW FILES output.


The following example shows a SHOW FILES statement for a partitioned Parquet table. The number and sizes of files are different from the equivalent partitioned text table used in the previous example, because INSERT operations for Parquet tables are parallelized differently than for text tables. (Also, the amount of data is so small that it can be written to Parquet without involving all the hosts in this 4-node cluster.)

The following example shows output from the SHOW FILES statement for a table where the data files are stored in Amazon S3:
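When data files live in Amazon S3, the paths reported by SHOW FILES carry an S3 URI scheme rather than an HDFS one. A hypothetical helper that classifies paths by scheme might look like this. Treating a path with no scheme as HDFS is an assumption about the cluster's default filesystem.

```python
from urllib.parse import urlparse

S3_SCHEMES = {"s3a", "s3n", "s3"}


def storage_backend(path):
    """Classify a SHOW FILES path by its URI scheme."""
    scheme = urlparse(path).scheme.lower()
    if scheme in S3_SCHEMES:
        return "s3"
    if scheme in ("hdfs", ""):
        # Assumption: a path without a scheme lives on the default filesystem, HDFS.
        return "hdfs"
    return scheme
```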

- Dark theme support was improved (Windows 10 and GTK)
- Data viewer:
  - Copy As: format configuration editor was added
  - Extra configuration for filter dialog (performance)
  - Sort by column was fixed (for small fetch sizes)
  - Case-insensitive filter support was added
  - Plaintext view now supports top/bottom dividers
  - Data editor was fixed (when column name conflicts with alias name)
  - Duplicate row(s) command was fixed for multiple selected rows
  - Edit sub-menu was returned to the context menu
  - Column auto-size configuration was added
  - Dictionary viewer was fixed (for read-only connections)
  - Current/selected row highlighting support was added (configurable)
- Metadata search now supports search in comments
- GIS/Spatial:
  - Map position is preserved after tiles change
  - Support for geometries with Z and M coordinates was added
  - PostGIS: DDL for 3D geometry columns was fixed
  - Presto and MySQL geometry type support was added
  - BigQuery now supports the spatial data viewer
  - Binary GeoJSON support was improved
  - Geometry export was fixed (SRID parameter)
  - Tiles definition editor was fixed (multi-line definitions + formatting)
- SQL editor:
  - Auto-completion for object names with spaces inside was fixed
  - Database object hyperlink rendering was fixed
- SQL Server: MFA (multi-factor authentication) support was added
- PostgreSQL: reading of array data types was fixed
- Oracle: indexes were added to table DDL
- Vertica: LIMIT clause support was improved
- Athena: extra AWS regions were added to the connection dialog
- Sybase IQ: server version detection was improved
- SAP ASE: user function loading was fixed
- Informix: cross-database metadata read was fixed
- We migrated to the Eclipse 2021-03 platform